What is the difference between crawling and indexing in SEO?

Crawling vs. Indexing

When researching SEO, chances are you’ve come across the terms crawling and indexing. They are among the most commonly used terms in search engine optimization, but what is the difference between them?

Googlebot uses an algorithmic process to determine which sites to crawl, how often, and how many pages to fetch from each site.

Search engines work through three primary functions:

- Crawling: Scour the Internet for content, looking over the code/content for each URL they find.

- Indexing: Store and organize the content found during the crawling process. Once a page is in the index, it’s in the running to be displayed as a result of relevant queries.

- Ranking: Provide the pieces of content that best answer a searcher’s query. This means that results are ordered from most relevant to least relevant.

We’ll be focusing on crawling and indexing, with Google as our point of reference for search engines.

What is crawling?

Crawling, or spidering, is the discovery process in which search engines send out robots, known as crawlers or spiders (Google’s is called Googlebot), to find new and updated content.

There isn’t a central registry of all web pages, so Google must constantly look for new and updated pages and add them to its list of known pages. This process is called “URL discovery.” Some pages are known because Google has already visited them. Other pages are discovered when Google follows a link from a known page to a new page.

Googlebot starts out by fetching a few web pages. It then follows the links on those pages to find new URLs. By hopping along this path of links, the crawler discovers new content that the search engine can add to its index.
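The link-following process described above can be sketched as a breadth-first crawl. This is a minimal illustration, not how Googlebot actually works: to stay runnable offline, it reads pages from a hypothetical in-memory `FAKE_WEB` dictionary (URL to HTML) instead of fetching them, and the `discover` and `LinkExtractor` names are our own.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin

# Hypothetical stand-in for the web: URL -> HTML body (no network access needed).
FAKE_WEB = {
    "https://example.com/": '<a href="/about">About</a> <a href="/blog">Blog</a>',
    "https://example.com/about": '<a href="/">Home</a>',
    "https://example.com/blog": '<a href="/blog/post-1#comments">Post 1</a>',
    "https://example.com/blog/post-1": "<p>No links here.</p>",
}


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def discover(seed_urls):
    """Breadth-first URL discovery: fetch known pages, then follow their links."""
    known = list(seed_urls)  # URLs in the order they were discovered
    seen = set(seed_urls)
    frontier = deque(seed_urls)
    while frontier:
        url = frontier.popleft()
        html = FAKE_WEB.get(url)  # a real crawler would make an HTTP request here
        if html is None:
            continue
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.hrefs:
            # Resolve relative links and drop #fragments, which don't change the page.
            absolute, _fragment = urldefrag(urljoin(url, href))
            if absolute not in seen:
                seen.add(absolute)
                known.append(absolute)
                frontier.append(absolute)
    return known


print(discover(["https://example.com/"]))
```

Starting from the homepage alone, the sketch finds all four pages, because each one is linked from a page the crawler already knows.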

Google’s indexing system, known as Caffeine, maintains a massive database of discovered URLs. Results are retrieved from it when a searcher looks for information that matches a URL’s content.

Permission to crawl does not guarantee indexing: search engines may index a crawlable page or reject it, for example if your website appears to be spam.

Tell search engines how to crawl your site

Googlebot doesn’t crawl all the pages it discovers. Some pages may be disallowed for crawling by the site owner, others can’t be accessed without logging in to the site, and still others may be duplicates of previously crawled pages.

For crawling to be possible, your site must allow Googlebot to follow its links. This is controlled by the robots.txt file on your website.

Robots.txt files suggest which parts of your site search engines should and shouldn’t crawl and, via specific directives, how fast they should crawl it. Not every crawler honors every directive: Googlebot, for example, ignores the Crawl-delay directive.

If Googlebot can’t find a robots.txt file for your site, it proceeds to crawl the site. Webmasters use robots.txt files to tell bots which URLs they may crawl and which they may not.
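Python’s standard library ships a robots.txt parser, `urllib.robotparser`, which makes it easy to see how a well-behaved crawler reads these rules. The file below is a hypothetical example for `example.com`, and `SomeOtherBot` is a made-up user agent. `Crawl-delay` is included because the parser exposes it; whether a given crawler honors it is up to that crawler.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for example.com.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search
Crawl-delay: 5

User-agent: Googlebot
Disallow: /cart/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# A crawler checks each URL against the group that matches its user agent.
for agent in ("Googlebot", "SomeOtherBot"):
    for url in ("https://example.com/blog/post-1",
                "https://example.com/cart/checkout",
                "https://example.com/search?q=shoes"):
        print(agent, url, parser.can_fetch(agent, url))

print(parser.crawl_delay("SomeOtherBot"))  # 5
```

Notice that Googlebot may crawl `/search` here: a crawler follows only the most specific group matching its user agent, so the rules under `User-agent: *` don’t apply to it.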

Discretion while crawling

Using robots.txt files to keep crawlers away from private pages, such as administration sign-in pages, is common practice. However, anyone can read a robots.txt file, so listing these pages there tells bad actors exactly where to look.

For this reason, it is better to give these pages a noindex directive and protect them behind a login form than to list them in your robots.txt file.
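A noindex directive can be delivered in two ways: a `<meta name="robots" content="noindex">` tag in the page’s HTML, or an `X-Robots-Tag: noindex` HTTP response header. The simplified sketch below shows how a crawler might check both; `is_noindexed` is a hypothetical helper, and it ignores refinements such as user-agent-specific header values. Keep in mind that a crawler can only see the directive if it is allowed to fetch the page, so a page you want kept out of the index shouldn’t also be blocked in robots.txt.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attrs = {name: (value or "") for name, value in attrs}
        # name="googlebot" targets Google's crawler specifically.
        if attrs.get("name", "").lower() in ("robots", "googlebot"):
            for part in attrs.get("content", "").split(","):
                self.directives.add(part.strip().lower())


def is_noindexed(html, headers):
    """Return True if the HTTP headers or the HTML carry a noindex directive."""
    # HTTP header names are case-insensitive, so normalize them first.
    header_value = {k.lower(): v for k, v in headers.items()}.get("x-robots-tag", "")
    if "noindex" in (part.strip().lower() for part in header_value.split(",")):
        return True
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


login_page = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
print(is_noindexed(login_page, {}))                                  # True
print(is_noindexed("<html></html>", {"X-Robots-Tag": "noindex"}))    # True
print(is_noindexed("<html></html>", {"Content-Type": "text/html"}))  # False
```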

Optimize for crawl budget

A crawl budget is the average number of URLs Googlebot will crawl on your site before leaving. Optimizing your crawl budget ensures that Googlebot doesn’t waste time on unimportant pages while ignoring important ones.
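One practical way to see where your crawl budget goes is to count Googlebot requests per URL in your server’s access logs. The sketch below assumes logs in the common Apache/Nginx “combined” format and uses made-up sample lines. Matching on the user-agent string is a simplification: anyone can claim to be Googlebot, and Google recommends verifying its crawler with a reverse DNS lookup.

```python
import re
from collections import Counter

# Made-up access-log lines in the "combined" log format.
LOG_LINES = [
    '66.249.66.1 - - [10/Mar/2024:10:00:00 +0000] "GET /blog/post-1 HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [10/Mar/2024:10:00:05 +0000] "GET /search?q=a HTTP/1.1" 200 900 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [10/Mar/2024:10:00:09 +0000] "GET /search?q=b HTTP/1.1" 200 900 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/Mar/2024:10:01:00 +0000] "GET /blog/post-1 HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]

# Captures the request path and the user agent (the last quoted field).
COMBINED = re.compile(r'"(?:GET|HEAD|POST) (?P<path>\S+) HTTP/[^"]*".*"(?P<agent>[^"]*)"$')


def googlebot_hits_by_path(lines):
    """Count requests whose user agent mentions Googlebot, per path (query string ignored)."""
    hits = Counter()
    for line in lines:
        match = COMBINED.search(line)
        if match and "Googlebot" in match.group("agent"):
            hits[match.group("path").split("?")[0]] += 1
    return hits


print(googlebot_hits_by_path(LOG_LINES).most_common())
# [('/search', 2), ('/blog/post-1', 1)]
```

In this sample, most of Googlebot’s visits go to internal search result pages, a common source of wasted crawl budget.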

Submit a sitemap

A sitemap is the easiest and fastest way to tell search engines which pages your website has and which ones you have updated. You can submit a sitemap through Google Search Console.

Search engines crawl billions of websites, and a sitemap nudges them to look in the direction of your website and crawl it.
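Sitemaps follow the XML format defined by the sitemaps.org protocol: a `<urlset>` element containing one `<url>` entry per page, each with a required `<loc>` and an optional `<lastmod>`. A minimal generator might look like this; the page list is hypothetical, and in practice it would come from your CMS or database.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

# Hypothetical pages: (absolute URL, last-modified date in YYYY-MM-DD format).
PAGES = [
    ("https://example.com/", "2024-03-01"),
    ("https://example.com/blog/post-1", "2024-03-10"),
]


def build_sitemap(pages):
    """Return sitemap XML, as a string, listing each page and its lastmod date."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap(PAGES))
```

Once the file is published, for example at `https://example.com/sitemap.xml`, you can submit it in Search Console or reference it from robots.txt with a `Sitemap:` line.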

Site navigation

Crawlers need a path of links on your site to guide them from page to page. Structure your website so that a crawler can reach every page by following links from a root page (usually the homepage). Many sites make the critical mistake of structuring their navigation in ways search engines can’t access, which hinders their ability to get listed in search results.
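To check that every page is reachable from the homepage, you can treat your site as a graph of internal links and run a breadth-first search from the root. The sketch below does this over a hypothetical `LINKS` map. Pages it never reaches (orphan pages) are ones a crawler following links alone would not discover, and the depth shows how many clicks each page is from the homepage.

```python
from collections import deque

# Hypothetical internal-link graph: page -> pages it links to.
LINKS = {
    "/": ["/blog", "/about"],
    "/blog": ["/blog/post-1", "/blog/post-2"],
    "/about": ["/"],
    "/blog/post-1": ["/blog/post-2"],
    "/blog/post-2": [],
    "/landing-page": ["/"],  # nothing links here, so it is an orphan
}


def click_depths(links, root="/"):
    """Breadth-first search from the root; returns {page: clicks from root}."""
    depth = {root: 0}
    queue = deque([root])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


depths = click_depths(LINKS)
orphans = sorted(set(LINKS) - set(depths))
print(depths)   # {'/': 0, '/blog': 1, '/about': 1, '/blog/post-1': 2, '/blog/post-2': 2}
print(orphans)  # ['/landing-page']
```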

What is indexing?

After crawling comes indexing. When Google and other search engines index your pages, those pages become eligible to appear in search results. Indexing does not, however, guarantee that your website will rank well: even indexed pages may not appear on page one of the results.

Factors affecting indexing

Indexing is storing and organizing the information found on the pages. The bot renders the code on each page much as a browser does, then catalogs the page’s content, links, and metadata.
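At its core, an index maps what appears on pages back to the pages themselves. Google’s index is vastly more sophisticated, but a toy inverted index, which maps each word to the set of URLs containing it, shows the basic idea of storing and organizing content so it can be looked up quickly at search time. The page texts below are made up.

```python
import re
from collections import defaultdict

# Made-up page text, as it might look after rendering and stripping the HTML.
PAGES = {
    "https://example.com/crawling": "How search engine crawlers discover pages",
    "https://example.com/indexing": "How search engines store and organize pages",
    "https://example.com/recipes": "Quick pasta recipes",
}


def build_index(pages):
    """Map each lowercase word to the set of URLs whose text contains it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in re.findall(r"[a-z0-9]+", text.lower()):
            index[word].add(url)
    return index


def lookup(index, query):
    """Return the URLs containing every word in the query (an AND search)."""
    words = re.findall(r"[a-z0-9]+", query.lower())
    if not words:
        return set()
    return set.intersection(*(index.get(word, set()) for word in words))


index = build_index(PAGES)
print(sorted(lookup(index, "search pages")))
```

A real index also deals with word variants, synonyms, and many signals beyond raw words; this sketch handles none of that.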

Proper SEO strategies

Although you need Google to crawl your site, indexing is the most important part. This is where SEO strategies come into play: if your site meets search engine standards, it is far more likely to be indexed and ready to be ranked on the SERPs (search engine results pages).

Google, in particular, has strict quality guidelines, and if you want to rank well, you need to put plenty of effort into content creation and optimization.

Duplicate content

Google indexes new, fresh content that it believes will improve its users’ experience. Publishing content that already exists on another site, or serving the same information on both www and non-www versions of a page, can affect indexing. Google wants to show the most relevant version of a page, and duplicate content can make it unclear which version should be indexed. Where duplication looks deliberately manipulative, it can even lead to penalties.
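Duplicates like www and non-www versions are usually handled by picking one preferred URL, redirecting the other versions to it, and pointing a `<link rel="canonical">` tag at it. The sketch below shows the normalization step, mapping URL variants to a single preferred form so duplicates are easy to spot. The specific rules (prefer https, drop `www.`, ignore trailing slashes and fragments) are assumptions for illustration; pick whichever preferred form suits your site and apply it consistently.

```python
from urllib.parse import urlsplit, urlunsplit


def preferred_url(url):
    """Normalize a URL to one preferred form (these rules are illustrative choices)."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if host.startswith("www."):
        host = host[len("www."):]         # prefer the non-www host
    path = parts.path.rstrip("/") or "/"  # treat /page and /page/ as the same page
    # Always use https and drop the #fragment, which never reaches the server.
    return urlunsplit(("https", host, path, parts.query, ""))


variants = [
    "http://www.example.com/shoes/",
    "https://example.com/shoes",
    "https://WWW.example.com/shoes#reviews",
]
print({preferred_url(u) for u in variants})  # {'https://example.com/shoes'}
```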

Keep your web pages in one location

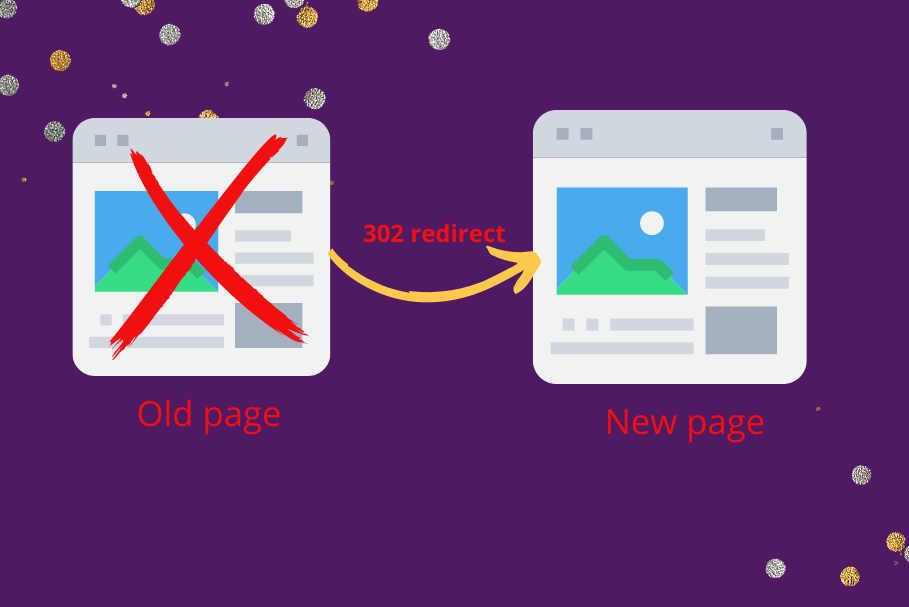

Avoid changing the location of web pages. If you must move a web page to a different location, meaning it will have a different URL, use a 301 redirect.
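How you set up a 301 depends on your server or CMS. As a framework-neutral sketch, here is a tiny WSGI application (WSGI being Python’s standard interface between web servers and applications) that answers requests for moved pages with `301 Moved Permanently` and a `Location` header pointing at the new URL. The `MOVED` mapping and its paths are hypothetical.

```python
# Hypothetical map of old paths to their new permanent locations.
MOVED = {
    "/old-guide": "https://example.com/guides/crawling-vs-indexing",
}


def app(environ, start_response):
    """WSGI app: 301-redirect moved pages, otherwise serve a placeholder page."""
    path = environ.get("PATH_INFO", "/")
    if path in MOVED:
        start_response("301 Moved Permanently", [("Location", MOVED[path])])
        return [b""]
    start_response("200 OK", [("Content-Type", "text/plain; charset=utf-8")])
    return [b"Hello from " + path.encode("utf-8")]


def fake_start_response(status, headers):
    """Stand-in for the server's callback: prints what would be sent."""
    print(status, headers)


app({"PATH_INFO": "/old-guide"}, fake_start_response)
```

To try it in a browser, you could serve `app` locally with the standard library’s `wsgiref.simple_server.make_server("localhost", 8000, app).serve_forever()`.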

Other status codes you may run into include:

- 4xx errors, returned when a page doesn’t exist or can’t be accessed. A typo in the URL is a common cause, and 404 (Not Found) is the best-known example. You can customize your 404 page with links to important pages on your site that users can visit instead.

- 302 redirects, for pages that have moved temporarily. They can be used where passing link equity is not a concern.

Too many redirects make it harder for Googlebot to crawl and index your website. Keep redirect chains as short as you can, ideally by removing middleman redirects: if page A redirects to B and B redirects to C, redirect A straight to C.
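Removing the middlemen means pointing every old URL straight at its final destination. Given a map of your redirects (hypothetical here; in practice you might export it from your server configuration), the sketch below follows each chain to its end and reports the direct target, skipping any chain that loops back on itself.

```python
# Hypothetical redirect map: source path -> target path.
REDIRECTS = {
    "/a": "/b",
    "/b": "/c",
    "/c": "/final",
    "/x": "/y",
    "/y": "/x",  # a redirect loop
}


def final_destination(url, redirects):
    """Follow redirects from url; return (final URL, hops), or raise on a loop."""
    seen = {url}
    hops = 0
    while url in redirects:
        url = redirects[url]
        hops += 1
        if url in seen:
            raise ValueError(f"redirect loop involving {url}")
        seen.add(url)
    return url, hops


def flatten(redirects):
    """Rewrite every redirect to point directly at its final destination."""
    flat = {}
    for source in redirects:
        try:
            flat[source] = final_destination(source, redirects)[0]
        except ValueError as err:
            print(f"skipping {source}: {err}")
    return flat


print(flatten(REDIRECTS))
```

Here `/a` needs three hops to reach `/final`; after flattening, `/a`, `/b`, and `/c` each redirect to `/final` in a single hop, while the `/x` and `/y` loop is reported so it can be fixed by hand.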

How do I know if my site is indexed?

Google search

You can check whether Google has indexed your website with site:, a search operator that restricts results to a specific website. Enter site: followed by your domain into Google’s search box to see pages it has indexed on your site; adding a path, as below, narrows the results to one section of the site:

site:yourdomain.com/posts

Google Search Console

Search Console provides a wealth of valuable data about your website, such as its search performance. For indexing specifically, its URL Inspection tool shows whether a given URL is in Google’s index.

You can break down that performance by searchers’ country or device and get a sense of how searchers interact with your site.

You can also submit a sitemap through Search Console so Google can crawl your site and index any updates.

Ranking

The final step in this series is ranking. Once your pages have been indexed, ranking is how a search engine decides which content should come up for a search and in what order, based on relevance.

The relevance of a page’s content is determined by algorithms within the search engine, such as Google’s RankBrain. RankBrain is a machine-learning system that continually improves its predictions through observation and training data.

This means that a site ranking lower on SERPs but containing useful information might be bumped up above other websites.
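Real ranking systems weigh a very large number of signals, and Google does not publish its formulas. Purely as a toy illustration of ordering results by relevance, the sketch below scores made-up pages by the share of their words that match the query and sorts by that score. This is not how Google ranks pages; the scoring rule is an assumption chosen for simplicity.

```python
import re

# Made-up page text for illustration.
PAGES = {
    "https://example.com/crawling-vs-indexing": "crawling and indexing explained: crawling finds pages, indexing stores them",
    "https://example.com/seo-basics": "seo basics: keywords, links, and a short note on crawling",
    "https://example.com/recipes": "quick pasta recipes for busy weeknights",
}


def tokenize(text):
    """Split text into lowercase words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def relevance(query, text):
    """Toy score: the share of the page's words that are query words."""
    words = tokenize(text)
    query_words = set(tokenize(query))
    if not words:
        return 0.0
    return sum(1 for word in words if word in query_words) / len(words)


def rank(query, pages):
    """Return URLs from most to least relevant, dropping pages that score zero."""
    scored = [(relevance(query, text), url) for url, text in pages.items()]
    return [url for score, url in sorted(scored, reverse=True) if score > 0]


print(rank("crawling indexing", PAGES))
```

For the query “crawling indexing”, the first page scores 0.4 (four of its ten words match) and the SEO basics page 0.1, so they are returned in that order and the recipe page is left out.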

Information is power

Knowing the difference between crawling and indexing in SEO is important because it helps you understand the relationship between your website and Google.

Knowing what these terms mean will also help you better understand Google’s site analysis data, such as the reports in Search Console.

If you work with other bloggers, understanding these two processes will help you make informed decisions about collaborating. Some bloggers offer backlinks without a nofollow attribute, so knowing the difference between followed and nofollow links is important.
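A link is marked nofollow through its `rel` attribute, as in `<a href="..." rel="nofollow">`; Google also recognizes the more specific values `rel="sponsored"` (paid links) and `rel="ugc"` (user-generated content). The sketch below sorts the links in a snippet of HTML into followed and nofollow-style links, for instance to check a guest post before it goes live. The HTML and the `LinkAudit` name are made up for illustration.

```python
from html.parser import HTMLParser

# rel values Google treats as hints not to credit the linked page.
NOFOLLOW_VALUES = {"nofollow", "sponsored", "ugc"}


class LinkAudit(HTMLParser):
    """Sorts <a href> links into followed and nofollow-style lists."""

    def __init__(self):
        super().__init__()
        self.followed, self.nofollow = [], []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attrs = dict(attrs)
        href = attrs.get("href")
        if not href:
            return
        # rel can hold several space-separated values, e.g. "sponsored noopener".
        rel = set((attrs.get("rel") or "").lower().split())
        (self.nofollow if rel & NOFOLLOW_VALUES else self.followed).append(href)


guest_post_html = """
<p>Read <a href="https://example.com/guide">this guide</a>,
see our <a href="https://partner.example/offer" rel="sponsored noopener">partner</a>,
or a <a href="https://forum.example/thread" rel="nofollow">forum thread</a>.</p>
"""
audit = LinkAudit()
audit.feed(guest_post_html)
print("followed:", audit.followed)
print("nofollow:", audit.nofollow)
```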

Summary

To summarize, crawling is search engines traveling the web to discover pages. Indexing is storing and organizing the pages they find, adding them to the search engine’s list of searchable pages. Ranking is the order in which pages with relevant content appear in the search results.

We hope that the information provided in this guide clears up the mystery surrounding these terms.