How often does Google update search results?

Introduction

Wondering how often Google updates its search results? Do you have a new website that has yet to appear in a Google search? Or have you made changes to the content on your site that are not yet reflected in a Google search?

Google works around the clock to provide fresh, up-to-date information to its users.

There is no single answer to how often Google updates its search results, because several variable factors go into the process. It typically takes between 4 days and 4 weeks, sometimes longer, for your site to appear in a search result or for changes to be reflected. Some websites have reported noticing changes within only a few hours.

How does Google find my site?

To answer these questions, we’ll delve a little deeper into the technical side of Google search, its bot and algorithm, and how they function. First, we’ll define the terms.

Googlebot

Google follows a crawling and indexing process to find your site and add it to search results, using its crawling software, Googlebot.

Googlebot is Google’s search software that collects information from pages across the web. These pages are evaluated and analyzed for changes, freshness, and relevance per the Google algorithm.

Crawling

Googlebot and the bots of other search engines find content to display in search results by scouring the web. Crawling is the process by which bots search the web. Bots go from website to website, inspecting content according to Google’s guidelines on SEO, and report back to Google.

This process is continuous. There are billions of websites and web pages to be crawled, and the process may seem slow if it takes a while to get to your content.

Indexing

After the bots collect information, it is processed, and the page is added to, or updated in, Google’s searchable index. These pages are then ranked depending on the quality of content detected by the bot.

How long does it take Google to find and update my site?

Crawling and indexing vary from site to site and are controlled by Google’s algorithm. This algorithm is a computer program that decides which sites to crawl, how often to crawl them, and how many pages to fetch from each crawled site for indexing.

How often Google crawls your site depends on a few factors:

Backlinks

These are links from other websites that lead to your site. Having links that lead to your content prompts Googlebot to crawl your site when it reaches your URL. High-quality links will do you a world of good. Do not pay sites for backlinks, because buying links goes against Google’s guidelines and could incur penalties.

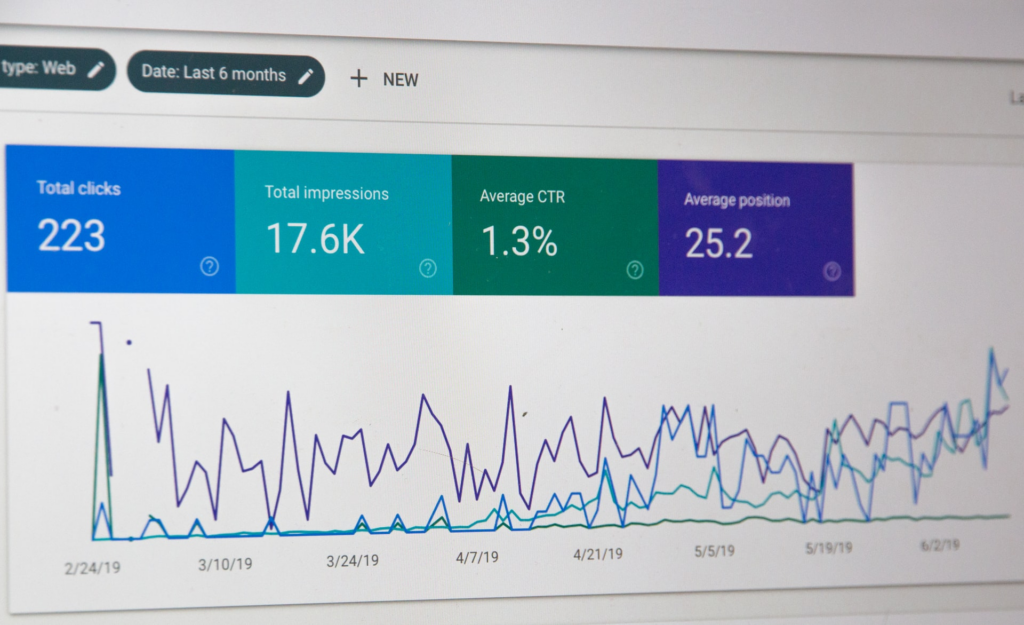

Analytical performance

A site with high traffic, click-throughs, and increased engagement triggers Google’s algorithm to analyze it more frequently than less active sites. Higher performance leads Google to trust your content and index it more often.

Domain authority

Domain Authority predicts how well a website ranks on searches. It is a 100-point score developed by Moz.

Device adaptability

Google is known to favor websites that adapt to any screen size. Google checks for fast loading speeds, ease of viewing on different screen types, and mobile usability.

Content

Larger sites that produce a lot of content (approximately two or three 1500-word articles per day) get priority over smaller sites. If yours is a local website with a lower search volume, expect slower results.

Popularity

A site’s popularity depends on its traffic flow, click-through rates (CTR), and engagement with the site’s content. Google crawls websites with higher organic traffic, CTRs, and engagement more often than their less popular counterparts.

Crawlability and structure

The structure of your website may make it hard for Googlebot to crawl your site. Websites that rely on technology other than HTML may be harder for bots to go through and process properly.

New site crawling and indexing

If your site is brand new and you’re still not appearing in searches, fret not. It typically takes Googlebot a few days or a few weeks after the launch of your website to crawl it and report back to Google to add it to its searchable index. This process is continuous, and the bot will get to your site.

You can also take the initiative to fast-track the process by:

Making high-quality content

Serve high-quality content to users. Your number one priority is ensuring that users have the best possible experience. Make your site unique, valuable, and engaging. Keep up-to-date with Google’s guidelines on content SEO. Maintaining high-quality web content directly affects how often Googlebot will crawl your site and update its search results.

Multi-device adaptability

Most people are searching on mobile. As discussed earlier in this article, your website’s ability to adapt to different screen sizes and still perform optimally is a deciding factor in whether the algorithm sends crawlers to your site.

Optimize your web pages to load quickly and display properly on all screen sizes. Google favors mobile-friendly websites. Google is more likely to crawl your website and update search results if your site is adaptable to mobile devices as well as computer screens.
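One common prerequisite for a page that adapts to screen size is a viewport meta tag in its HTML. The sketch below is a minimal illustration of checking for that tag with Python's standard library. The function and class names are hypothetical, and the check is an assumption about what is worth inspecting, not a test Google is known to run.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Records the content of the first-seen <meta name="viewport"> tag."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = dict(attrs)
        if (attr_map.get("name") or "").lower() == "viewport":
            self.viewport = attr_map.get("content") or ""


def has_responsive_viewport(html):
    """Return True if the page declares a device-width viewport (hypothetical helper)."""
    finder = ViewportFinder()
    finder.feed(html)
    if finder.viewport is None:
        return False
    # Ignore spacing differences such as "width = device-width".
    return "width=device-width" in finder.viewport.replace(" ", "")


if __name__ == "__main__":
    page = '<head><meta name="viewport" content="width=device-width, initial-scale=1"></head>'
    print(has_responsive_viewport(page))
```

A passing check only shows the tag is present. It says nothing about loading speed or how the layout actually renders, so treat it as a first sanity check.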

Website security

Users nowadays expect the sites they visit to be secure. Paying attention to cybersecurity and ensuring your website is secure may improve the rate at which bots crawl your site.

Add analytics

Analytics is good for tracking your website performance. It paints a picture of what you’re doing right and provides insight into what can be improved. Adding analytics to your website could also alert Google that a new website is ready to be indexed. Once updates are indexed, Google updates its search results.

Use Google Search Console

Google Search Console (GSC) is a must-have tool for website stat management. It is a free Google tool that allows you to monitor your site’s status in Google’s index and search results. The search console enables you to submit a new website for faster indexing.

Submit a sitemap

A sitemap is a map of your website. It lists the content of your site to help Googlebot discover the pages you consider vital, along with when they were last updated. You can submit your sitemap on the Google Search Console dashboard.
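As a rough illustration of what a sitemap file contains, here is a minimal sketch that builds one in the sitemaps.org XML format using Python's standard library. The `build_sitemap` function and the example.com URLs and dates are hypothetical placeholders, not part of any Google tool.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, lastmod) pairs.

    lastmod is a 'YYYY-MM-DD' string, or None to leave it out.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical pages for illustration only.
    pages = [
        ("https://example.com/", "2024-01-15"),
        ("https://example.com/about", None),
    ]
    print(build_sitemap(pages))
```

The output would typically be saved as `sitemap.xml` at the root of the site before being submitted. Many content management systems generate this file automatically, so a hand-rolled script is only needed when yours does not.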

Sometimes when crawling, Google may miss your site. Crawlers go through billions of web pages at any time, so this is not an uncommon scenario. Here are some reasons Googlebot might have skipped your site:

- No links are pointing back to your site from other sites on the web. Backlinks play a massive role in visibility, and a lack of them may cause Google to overlook your site altogether. That said, do not pay for backlinks.

- The site design makes it difficult for Googlebot to crawl its content effectively.

- Web pages return an error when bots try to crawl them. This may stem from login pages or from the site blocking Google. Check by making sure your site can be opened in the incognito mode of a web browser.

Re-indexing for edited content

If you’ve removed or edited some of your web content, but it still appears in search results in its original form, crawlers have not yet made their way to your new content, and Google needs to crawl it again. Sometimes, even after crawling, the search result may stay the same. This may be the case if:

- For deleted content, Google believes the deletion is an unintended error and that the URL will be back.

- Many pages still link to the URL.

- Google has not been allowed to crawl the URL due to the robots.txt configuration.

A robots.txt file is a file webmasters create to instruct web crawlers such as Googlebot on how to crawl pages on their websites. The robots.txt file is a part of the robots exclusion protocol (REP). These crawl instructions are specified by “allowing” or “disallowing” paths for particular user agents (web crawling software).
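To make the allow and disallow idea concrete, the sketch below uses Python's standard `urllib.robotparser` module to test URLs against a small, made-up robots.txt. The rules, the `can_crawl` helper, and the example.com URLs are hypothetical. Python's parser is also only an approximation of how Googlebot reads the file, since it does not support wildcard patterns, for example.

```python
from urllib.robotparser import RobotFileParser

# A made-up robots.txt for illustration: one group for all crawlers,
# and a more specific group for Googlebot.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

User-agent: Googlebot
Disallow: /drafts/
"""


def can_crawl(robots_txt, user_agent, url):
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for path in ("/blog/post", "/drafts/post"):
        url = "https://example.com" + path
        print(path, can_crawl(ROBOTS_TXT, "Googlebot", url))
```

If a URL you expect to see in search results comes back as blocked for Googlebot, the robots.txt rules are the first thing to review.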

To make sure Google de-indexes deleted pages as fast as possible:

- Make sure the page responds with a 404 or 410 status code.

- Make sure the robots.txt file is not blocking the URL.

- Don’t link to the URL internally anymore.

- Manually submit the URL for removal in Google Search Console.

- If you delete a lot of URLs at a time, consider using a sitemap to help Google understand which URLs are currently active.
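The first three checks in the list above can be sketched as a small helper. This is a minimal illustration, assuming you have already collected the URL's HTTP status code, whether robots.txt blocks it, and which of your own pages still link to it. The function name and inputs are hypothetical.

```python
def deindex_blockers(status_code, blocked_by_robots, internal_links):
    """List reasons a deleted URL might linger in search results.

    status_code: HTTP status the deleted URL currently returns.
    blocked_by_robots: True if robots.txt disallows crawling the URL.
    internal_links: pages on your own site that still link to the URL.
    """
    problems = []
    if status_code not in (404, 410):
        problems.append(f"URL returns {status_code}; expected 404 or 410")
    if blocked_by_robots:
        problems.append("robots.txt blocks the URL, so crawlers cannot see it is gone")
    if internal_links:
        problems.append(f"{len(internal_links)} internal page(s) still link to the URL")
    return problems


if __name__ == "__main__":
    # Hypothetical inputs: the page still returns 200 and one page links to it.
    for problem in deindex_blockers(200, False, ["/blog/old-roundup"]):
        print(problem)
```

An empty list means none of these three issues applies. The remaining steps, submitting the URL in Google Search Console and keeping your sitemap current, still have to be done by hand.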

How does SEO help?

SEO (Search Engine Optimization) is the practice of applying calculated strategies to your website and content so that it is discovered faster by search engine crawlers and ranked on search engine results pages (preferably the first page).

Optimization makes it easier for Googlebot to find your website and rank you higher. Optimization comes in two forms: optimizing your website and optimizing your website’s content. For more information on SEO, please read our article on SEO and how to do it yourself.

Conclusion

We cannot definitively answer how often Google updates its search results because the timing depends on several variable factors. Some sites get crawled and indexed hourly, while others take days or weeks.

For highly relevant, timely, high-quality sites, search results appear to update in near real time. These sites may be high-traffic news sites like CNN or FOX.

Practice makes perfect. Constantly educating yourself on SEO and its changing dynamics and applying these seemingly little changes to your site and its content can help Google direct its bots your way. If your site takes a while to get noticed while another gets noticed sooner, don’t panic. Carefully review your stats following the guide in this article to see if the problem lies on your end. If not, keep making amazing content, and Google will notice it.